VISUAL COGNITION

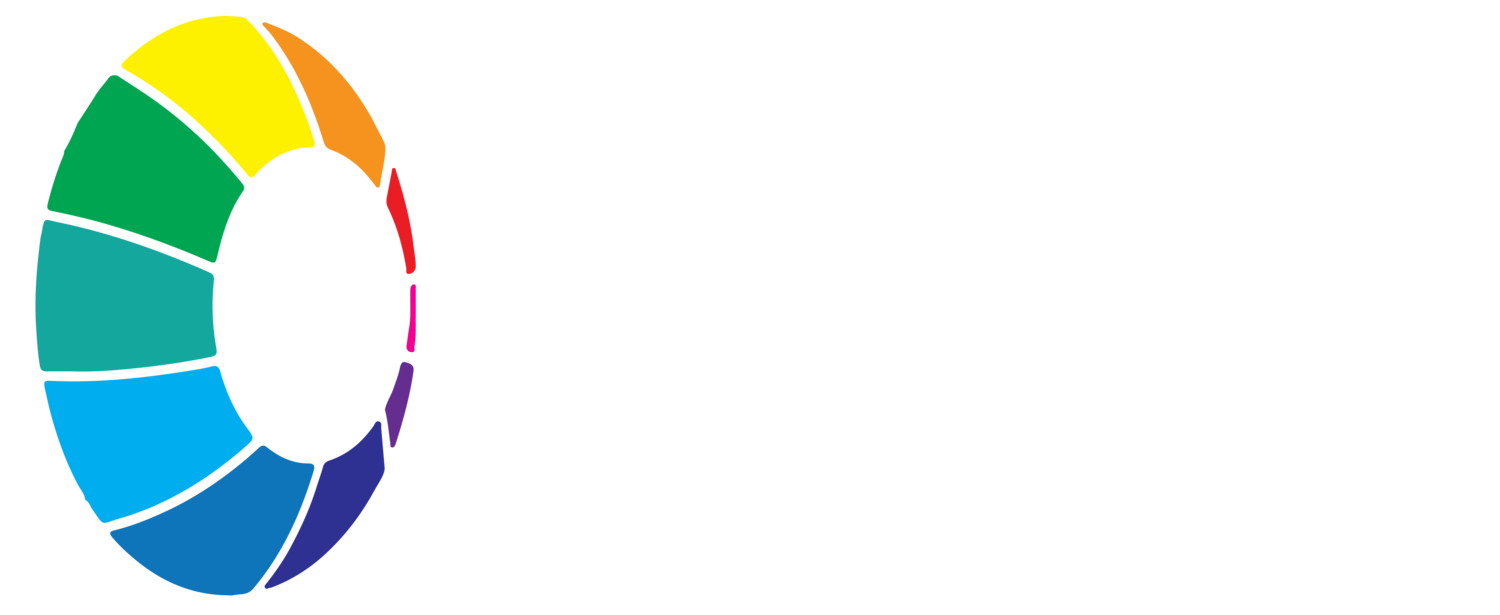

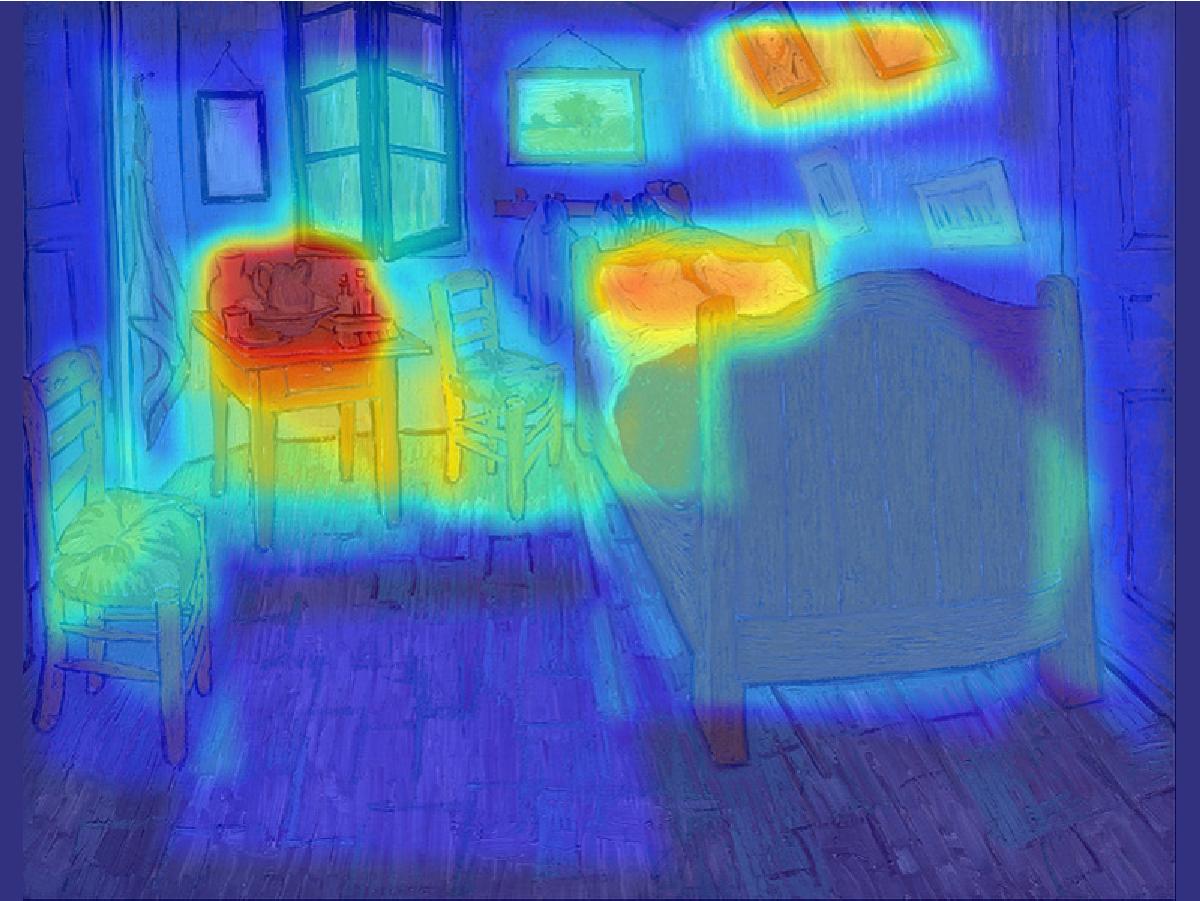

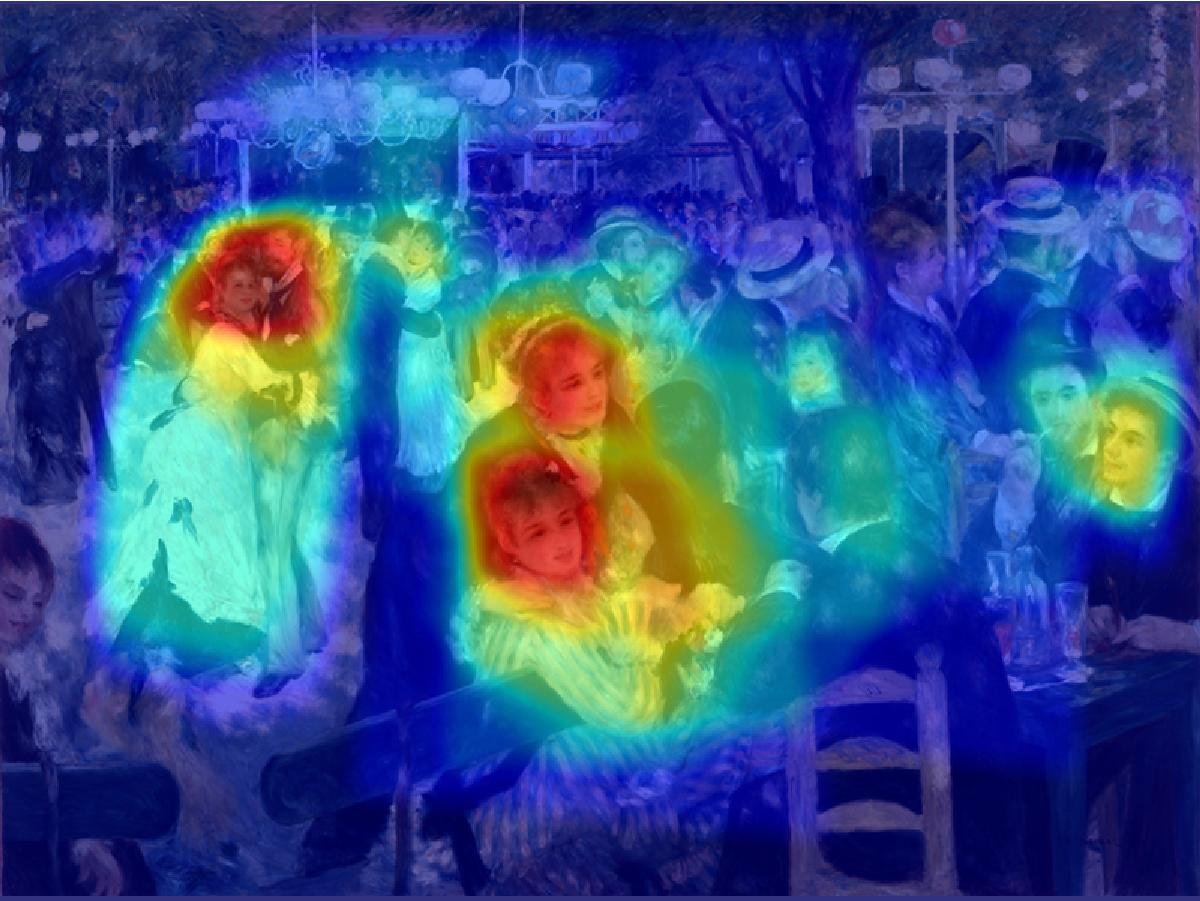

In the Queen’s Visual Cognition Laboratory (QVCL), we study how people perceive and process visual information in their immediate environment (that is, in real-world scenes). We are interested in how people quickly understand the space they are looking at (getting the “scene gist”), how and when they attend to different aspects of the environment, and what type of information they remember once they are no longer in that environment. To examine these questions, we run behavioural and eye movement experiments in which we change different aspects of the images and the task.

Scene Categorization

We test theories of how cognitive processes work by disrupting those processes and examining how long they take to recover. For example, in a series of studies, we examine how scenes that contain more than one possible interpretation (depending on where you are looking) are processed over time and under different tasks (e.g., Castelhano, Therauilt, & Fernandes, 2018). Using the slider, you can see what each scene looks like when it is normal and when it contains two contradictory categories.

Visual Search

One topic we have explored is how information from objects and scenes is used to search extremely efficiently. Below are example stimuli from one of our studies (Pereira & Castelhano, 2014), in which we manipulated the information outside of a moving window. This window is normally tied to where a person is looking, but here you can move it with your mouse.

Can you find these objects in the image below?

1) a lamp 2) a glass 3) a magazine basket

Object Function and Action

We’re also interested in how objects are organized in scenes and how that organization is linked to actions and object functions (Castelhano & Witherspoon, 2016). We are testing this using a number of invented objects that don’t exist in the real world. With these objects, we can control the type and extent of knowledge people have about them and see how this knowledge shapes their assumptions about the objects and, in turn, how those assumptions affect attentional control during search.